Molmo AI: Revolutionising Open-Source Multimodal Intelligence

Solutions for Text and Visual Intelligence

Introduction

Molmo, trained on the PixMo datasets, is a state-of-the-art, open-source family of multimodal models developed by the Allen Institute for AI (Ai2). Designed to rival proprietary models like GPT-4 and Claude, Molmo offers strong image and text comprehension at a fraction of the cost and complexity of its competitors. It is free and accessible, and it shows that open-source AI can match or even surpass closed, proprietary solutions on key benchmarks.

Molmo’s strengths lie in its ability to understand, process, and generate insights from both text and visual data, making it a good fit for a variety of applications, from AI-powered web agents to complex robotics systems. The family comprises four models: Molmo-72B, Molmo-7B-D, Molmo-7B-O, and MolmoE-1B.

Highlights of Molmo AI

- Multimodal Understanding

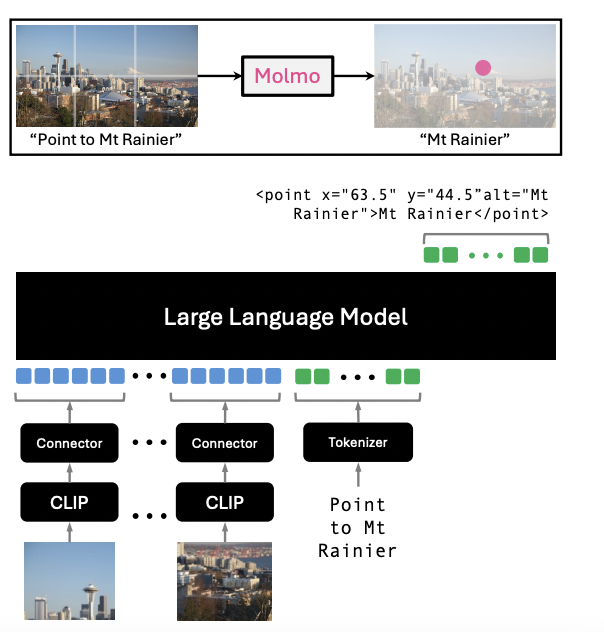

Molmo stands out for its ability to handle both textual and visual data within a single model. It can interpret images with remarkable accuracy, recognise objects, read charts, and even interact with user interface (UI) elements. This makes Molmo highly valuable for tasks such as automating web navigation, performing complex data analysis, and developing AI systems that can “see” and understand their environment.

- Efficient Data Utilisation

Unlike large proprietary models that often rely on vast amounts of training data, Molmo is trained on a carefully curated dataset of just 600,000 images. The images are paired with high-quality, conversational descriptions, which keeps the model efficient and faster to train. As a result, Molmo AI can perform complex tasks like object counting and emotion recognition with precision, despite the smaller dataset.

- Open-Source Flexibility

One of the most significant advantages of Molmo AI is its open-source nature. Developers and researchers can access the model weights, code, and training data freely, which promotes innovation across industries. This transparency allows users to customise the model for specific use cases without the financial or technical constraints typically associated with proprietary AI solutions.

- Low Resource Requirements

Molmo AI is designed to be efficient, running on relatively low-powered hardware without sacrificing performance. While proprietary models often demand vast computational resources, Molmo’s optimised architecture lets the smaller 1B model perform effectively on typical devices, making advanced AI accessible to a broader audience.

Key Features and Capabilities

Molmo specialises in visual understanding, demonstrating impressive real-time image analysis capabilities. Its open-source nature allows for greater transparency and customisation compared to closed proprietary models.

Molmo has potential applications in the following domains:

- Computer vision tasks

- Image recognition and classification

- Visual question answering

- Multimodal AI research and development

Use Cases for Molmo AI

- Web Agents and Automation

Molmo AI’s ability to recognise and interact with UI elements makes it an ideal tool for developing web agents that can autonomously navigate websites, extract data, and even make decisions based on visual inputs. This is particularly useful in sectors like e-commerce, customer service, and digital marketing.

- Robotics

In robotics, Molmo’s visual understanding capabilities can be applied to guide robots in navigating environments, identifying objects, and performing tasks autonomously. Its image comprehension allows it to interact with real-world data, making it an asset in industries ranging from manufacturing to healthcare.

- Content Generation and Analysis

Developers looking to integrate AI into content creation can leverage Molmo’s multimodal capabilities. From generating text based on image inputs to analysing visual content for specific patterns, Molmo can be adapted to a wide range of content-driven applications.

How Molmo Compares to Other AI Models

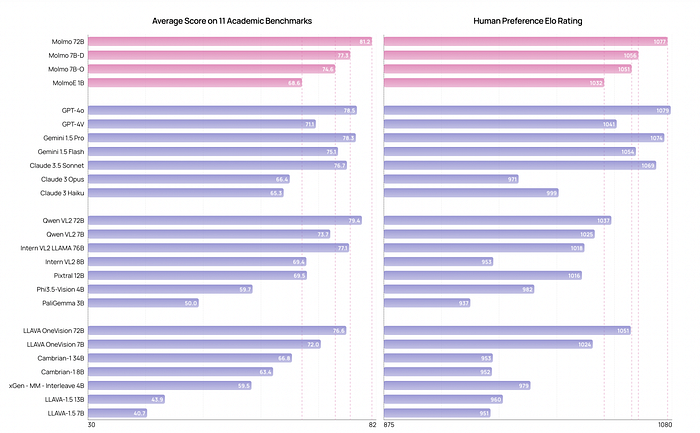

Molmo AI competes directly with leading closed-source models like GPT-4V and Gemini 1.5. While many proprietary models boast superior performance due to their vast datasets, Molmo’s streamlined and highly efficient data utilisation enables it to achieve similar outcomes with a fraction of the data. Furthermore, its open-source framework encourages community-driven improvements, ensuring that the model continues to evolve and stay competitive.

In benchmarks, Molmo has outperformed several commercial models on tasks involving both image and text comprehension, showing that an open-source approach can be just as powerful and cost-effective. The 72B parameter version of Molmo AI is particularly noteworthy for its ability to handle complex visual and language tasks, delivering results that match or even exceed those of its commercial counterparts. Molmo is also positioned as the most open family of vision-language models, and within the family Molmo-7B-O, which is built on Ai2’s own OLMo base, is the most open model.

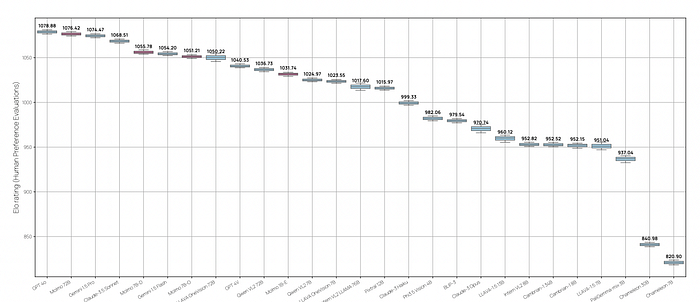

MolmoE-1B, based on the OLMoE-1B-7B mixture-of-experts LLM, nearly matches the performance of GPT-4V on both academic benchmarks and user preference. Molmo-7B-O and Molmo-7B-D, based on OLMo-7B and Qwen2 7B, respectively, perform comfortably between GPT-4V and GPT-4o on both academic benchmarks and user preference. Ai2’s best-in-class Molmo-72B model, based on Qwen2 72B, achieves the highest academic benchmark score and ranks second by human preference, just behind GPT-4o. It outperforms many state-of-the-art proprietary systems, including Gemini 1.5 Pro and Flash, and Claude 3.5 Sonnet.

After training for captioning, the model is fine-tuned, with all parameters updated, on a mixture of supervised training data. This mixture includes common academic datasets and several new PixMo datasets, such as PixMo-AskModelAnything, PixMo-Docs, PixMo-Points, PixMo-Clocks, and PixMo-CapQA. Because this mixture includes pointing data, the model can also point to objects in an image.

You can read more details in the academic paper listed in the references.
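To make the pointing ability more concrete, here is a minimal Python sketch of how an application might turn a pointing reply into coordinates, for example to count objects or to click a UI element. The reply format is an assumption, not something this article specifies: the sketch assumes XML-like `<point>` and `<points>` tags whose `x`/`y` attributes are percentages of the image width and height. The helper names `parse_points` and `to_pixels` are hypothetical.

```python
import re
from typing import List, Tuple

# Assumed reply shape (an illustration, not a documented contract):
#   <point x="61.5" y="40.4" alt="boat">boat</point>
#   <points x1="10.0" y1="20.0" x2="30.5" y2="40.0" alt="boats">boats</points>
# with coordinates expressed as percentages (0-100) of width and height.
_TAG = re.compile(r"<points?\s+([^>]*)>")
_COORD = re.compile(r'\b([xy])(\d*)="([\d.]+)"')


def parse_points(text: str) -> List[Tuple[float, float]]:
    """Return (x_percent, y_percent) pairs found in a model reply."""
    points = []
    for tag in _TAG.finditer(text):
        xs, ys = {}, {}
        for axis, idx, value in _COORD.findall(tag.group(1)):
            (xs if axis == "x" else ys)[idx] = float(value)
        # Pair x and y values that share the same index suffix ("", "1", "2", ...).
        for idx in sorted(xs, key=lambda s: int(s or 0)):
            if idx in ys:
                points.append((xs[idx], ys[idx]))
    return points


def to_pixels(points: List[Tuple[float, float]],
              width: int, height: int) -> List[Tuple[int, int]]:
    """Convert percentage coordinates to integer pixel coordinates."""
    return [(round(x / 100 * width), round(y / 100 * height)) for x, y in points]
```

With a reply in this shape, `len(parse_points(reply))` gives an object count, and `to_pixels(...)` gives positions that a web agent or robot controller could act on.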

How to Get Started with Molmo AI

Using Molmo AI is simple and does not require extensive technical knowledge. You can start by visiting the Molmo AI dashboard, where you can upload images, test the model’s capabilities, and explore various use cases. Molmo’s documentation provides extensive guidance for developers looking to integrate it into their projects, offering flexibility whether you’re building small-scale applications or large AI-driven systems.

For those with more advanced technical requirements, the full source code, model weights, and datasets are available for download. This open access fosters a collaborative community where users can contribute to the ongoing development of the model, ensuring that Molmo remains at the forefront of AI innovation.
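For readers who want to run the downloadable weights locally, the sketch below follows the usage pattern published on the Hugging Face model card listed in the references. Treat it as an illustration under assumptions: it needs `transformers`, `torch`, and `Pillow` installed plus enough memory for a 7B model, and `processor.process` and `model.generate_from_batch` come from the checkpoint's own remote code, so they may change between releases. The wrapper name `describe_image` is hypothetical.

```python
MODEL_ID = "allenai/Molmo-7B-D-0924"  # checkpoint named in the references


def describe_image(image_path: str, prompt: str = "Describe this image.",
                   max_new_tokens: int = 200) -> str:
    """Run one prompt against one local image and return the generated text."""
    # Heavy imports stay inside the function so this file can be imported
    # without the model dependencies installed.
    from PIL import Image
    from transformers import AutoModelForCausalLM, AutoProcessor, GenerationConfig

    # trust_remote_code=True is required because the checkpoint ships its own
    # processing and modelling code.
    processor = AutoProcessor.from_pretrained(
        MODEL_ID, trust_remote_code=True, torch_dtype="auto", device_map="auto")
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, trust_remote_code=True, torch_dtype="auto", device_map="auto")

    # Build model inputs from the image and prompt, then add a batch dimension.
    inputs = processor.process(images=[Image.open(image_path)], text=prompt)
    inputs = {k: v.to(model.device).unsqueeze(0) for k, v in inputs.items()}

    output = model.generate_from_batch(
        inputs,
        GenerationConfig(max_new_tokens=max_new_tokens, stop_strings="<|endoftext|>"),
        tokenizer=processor.tokenizer,
    )
    # Keep only the tokens generated after the prompt.
    new_tokens = output[0, inputs["input_ids"].size(1):]
    return processor.tokenizer.decode(new_tokens, skip_special_tokens=True)
```

A call such as `describe_image("harbour.jpg", "Count the number of boats")` would reproduce the kind of test shown in the next section, where `harbour.jpg` stands in for any local image file.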

Testing with Molmo AI Online

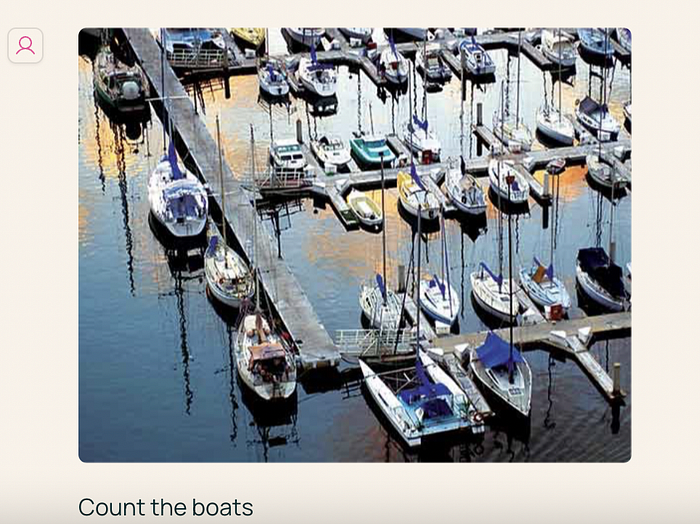

Test 1:

The same prompt, “Count the number of boats”, was also checked in Llama 3.2 using its online chatbot.

We can see that Molmo performed well in this use case since there are about 35 boats.

Test 2:

The same picture and the same prompt, “Who is this person”, were also checked in Llama 3.2 using its online chatbot.

Molmo correctly recognised the person.

Test 3:

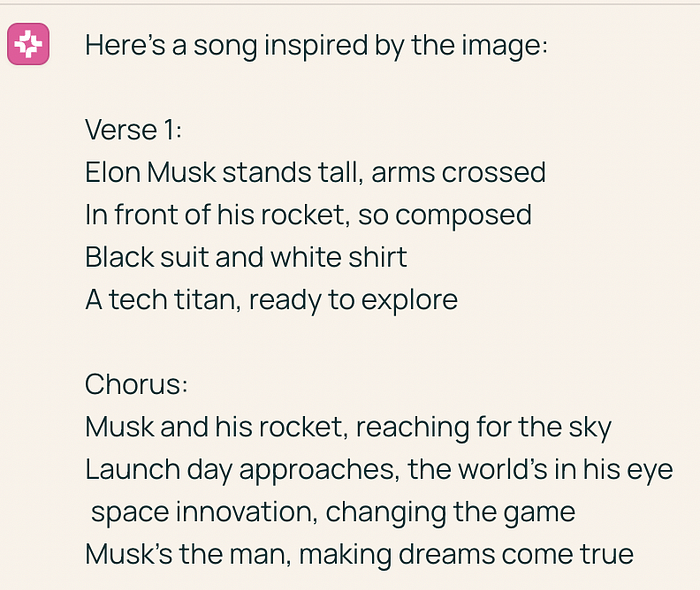

Molmo was given the same image of Elon Musk and asked to create a song.

Output by Molmo:

The same picture and the same prompt, “Create a song out of this image”, were also checked in Llama 3.2 using its online chatbot.

Molmo successfully created the song.

Comparing Molmo AI to GPT-4 and Claude

Molmo offers several unique features that differentiate it from models like GPT-4 and Claude:

- Open-Source Accessibility

Unlike GPT-4 and Claude, which are proprietary models, Molmo is fully open-source. This allows researchers and developers to freely access, modify, and build upon the model’s architecture and training data.

- Efficient Training

Molmo achieves high performance while using significantly less training data than its proprietary counterparts. According to Ai2, Molmo uses “1000x less data” than models like GPT-4 and Claude, thanks to innovative training techniques.

- Multimodal Capabilities

While GPT-4 and some versions of Claude have multimodal abilities, Molmo specialises in visual understanding. It can process and analyse images in real time, making it particularly strong for visual tasks.

- Benchmark Performance

Molmo has shown impressive results on several third-party benchmarks:

  - Molmo-72B outperforms GPT-4, Claude 3.5 Sonnet, and Gemini 1.5 on certain visual question-answering tasks like DocVQA and TextVQA.

  - It achieves the highest score on 11 key benchmarks and ranks second in user preference, closely following GPT-4o.

- Specialised Visual Grounding

Molmo incorporates pointing data, which enhances its ability to provide visual explanations and interact with physical environments. This feature is particularly valuable for robotics applications.

- Flexible Architecture

Molmo offers multiple model sizes and variants, allowing users to choose the best fit for their specific needs. Options range from the 1 billion parameter MolmoE-1B to the 72 billion parameter Molmo-72B.

- Permissive Licensing

All Molmo models are available under Apache 2.0 licenses, enabling a wide range of research and commercial applications without the restrictions often associated with proprietary models.

Exploring Different Molmo Models

Molmo-72B stands out as the flagship model in the Molmo family, offering several key advantages over its smaller counterparts. Here’s a comparison of the different Molmo versions:

Molmo-72B (Flagship Model)

- Parameters: 72 billion
- Base Model: Alibaba Cloud’s Qwen2-72B
- Performance: Outperforms GPT-4, Claude 3.5 Sonnet, and Gemini 1.5 on certain benchmarks
- Capabilities: Highest overall performance in the Molmo family

Molmo-7B-D (Demo Model)

- Parameters: 7 billion
- Base Model: Alibaba’s Qwen2-7B
- Purpose: Designed for demonstration and wider accessibility

Molmo-7B-O

- Parameters: 7 billion
- Base Model: Ai2’s OLMo-7B
- Distinction: Uses Ai2’s own base model, potentially offering unique capabilities

MolmoE-1B

- Parameters: 1 billion active parameters (based on a 7B mixture-of-experts model)
- Base Model: OLMoE-1B-7B mixture-of-experts LLM
- Notable Feature: Nearly matches GPT-4V performance on some benchmarks despite its smaller size

Why Molmo-72B is the Flagship

- Superior Performance: Molmo-72B achieves the highest scores on benchmarks, outperforming even some proprietary models.

- Largest Capacity: With 72 billion parameters, it has the most extensive knowledge and capability among Molmo models.

- Benchmark Leader: It ranks highest on 11 key benchmarks and second in user preference, closely following GPT-4o.

- Visual Task Excellence: Particularly strong in visual question-answering tasks like DocVQA and TextVQA.

- Comprehensive Abilities: Offers the most well-rounded performance across various tasks and domains.

The other Molmo versions serve important roles:

Molmo-7B models offer a balance of performance and efficiency for less resource-intensive applications. MolmoE-1B demonstrates impressive capabilities in a much smaller package, potentially suitable for mobile or edge devices.
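As a rough aid for choosing between variants, the sketch below estimates how much memory the language-model weights alone would need at different numeric precisions. It is back-of-the-envelope arithmetic under stated assumptions: the parameter counts are the nominal figures from the model names, the vision encoder, activations, and runtime overhead are ignored, and the function names are hypothetical. Note that MolmoE-1B activates about 1 billion parameters per token, but the full 7B mixture-of-experts weights still have to be held in memory.

```python
# Nominal total parameter counts in billions, taken from the model names.
# MolmoE-1B is listed at 7 because all expert weights must be stored,
# even though only about 1B parameters are active per token.
TOTAL_PARAMS_BILLIONS = {
    "Molmo-72B": 72,
    "Molmo-7B-D": 7,
    "Molmo-7B-O": 7,
    "MolmoE-1B": 7,
}

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gb(model: str, dtype: str = "bfloat16") -> float:
    """Approximate weight memory in GB (10^9 bytes): billions of params x bytes each."""
    return float(TOTAL_PARAMS_BILLIONS[model] * BYTES_PER_PARAM[dtype])


def models_that_fit(budget_gb: float, dtype: str = "bfloat16") -> list:
    """List the variants whose weights alone fit within the given memory budget."""
    return [m for m in TOTAL_PARAMS_BILLIONS
            if weight_memory_gb(m, dtype) <= budget_gb]
```

Under these assumptions, Molmo-72B needs roughly 144 GB for its weights in bfloat16, while each 7B-scale variant needs roughly 14 GB, which is why the smaller models suit less resource-intensive deployments.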

Conclusion

In conclusion, Molmo, particularly the flagship Molmo-72B model, represents a significant leap forward in open-source multimodal AI. By achieving performance that rivals or even surpasses proprietary models like GPT-4, Claude, and Gemini on several key benchmarks, Molmo is closing the gap between open and closed AI systems. Its unique features, such as visual grounding capabilities and efficient training on high-quality data, set it apart in the AI landscape. The open-source nature of Molmo, combined with its impressive performance and innovative approach to data efficiency, positions it as a powerful tool for researchers, developers, and businesses alike. As the AI community continues to build upon and refine Molmo, it has the potential to democratise access to advanced multimodal AI capabilities, fostering innovation and pushing the boundaries of what’s possible in artificial intelligence.

References:

- Allen Institute for AI, “Molmo-7B-D-0924,” Hugging Face. [Online]. Available: https://huggingface.co/allenai/Molmo-7B-D-0924

- M. Deitke et al., “Molmo and PixMo: Open Weights and Open Data for State-of-the-Art Multimodal Models,” Allen Institute for AI, Sep. 2024. [Online]. Available: https://molmo.allenai.org/paper.pdf
