The Idea of Reflection Models

Evaluating the Role of Self-Reflection in AI: Innovation or Overcomplication?

Introduction

Artificial Intelligence (AI) has made remarkable strides over the past decade, with new advancements pushing the boundaries of what machines can achieve. One of the latest developments in the field is the introduction of “reflection” within AI models. This idea suggests that AI systems should have the ability to evaluate, reassess, and correct their own thought processes, much like humans reflect on their decisions. Reflection in AI, hailed by some as a breakthrough, claims to bring a new level of self-awareness and error correction to models. However, it has also sparked significant controversy, with critics questioning whether it introduces unnecessary complexity, resulting in inefficient performance without clear benefits.

In this article, we will examine the core concept of reflection in AI, explore why it’s stirring debate, and argue that for many tasks, reflection attempts to improve can often be handled more efficiently by simpler, non-reflective models. We’ll discuss how reflection could lead to overthinking, its impact on model performance, and whether this feature adds value to AI systems or merely slows them down.

What is the idea behind Reflection Models?

Reflection in AI is modelled after the way humans think. Just as people reflect on their thoughts, consider alternatives, and improve their decision-making processes, reflective AI systems are designed to do the same. The core idea is to make AI systems more accurate and reliable by allowing them to monitor their own reasoning, identify errors, and make improvements. This process typically involves several steps:

Thinking Phase: The AI model generates an initial response or decision based on the data and algorithms it has been trained on.

Reflection Phase: The model reviews its output, identifies potential mistakes, or considers alternative approaches to the problem.

Adjustment Phase: Based on its reflection, the AI makes adjustments to its response, providing a refined and more accurate output. Proponents of this approach argue that reflection brings AI closer to human-like thinking and enables it to handle complex, ambiguous tasks more effectively. The idea is that a reflective AI can learn from its own mistakes without needing constant human intervention. However, this capability comes with significant challenges, leading some experts to question whether the benefits outweigh the costs.

Controversy and Claims

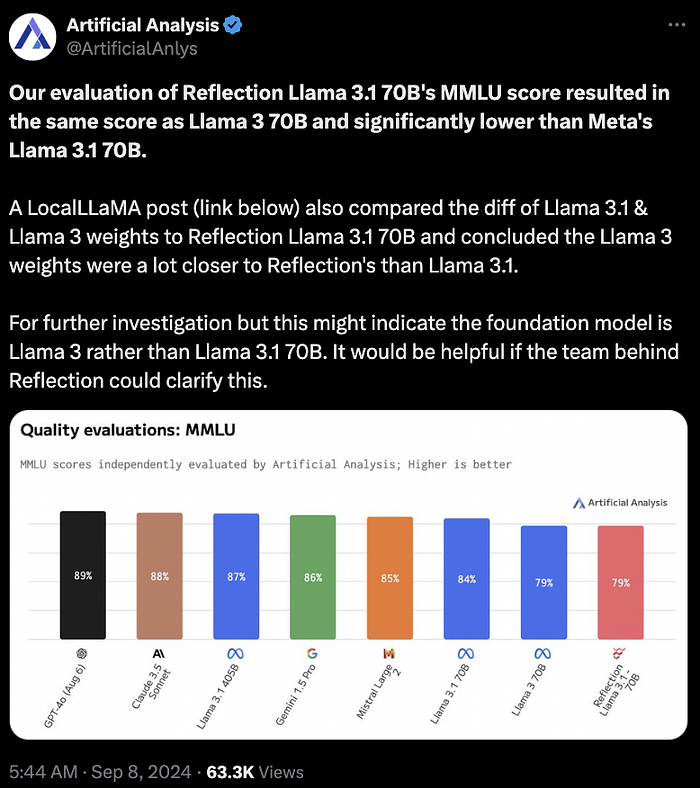

One of the models, Reflection Llama 3.1 stirred excitement with claims of surpassing other AI models, though its path has seen a mix of achievements and challenges. Some reported that the model identified itself as Claude Sonnet 3.5, raising questions about its true origins. Additionally, some users speculated that the model was actually based on the older Llama 3 rather than the updated Llama 3.1. These controversies have added an intriguing twist to the model’s narrative. When Reflection Llama 3.1 70B was launched, it claimed to outdo GPT-4o and Claude 3.5 Sonnet on various benchmarks. However, early testing by Artificial Analysis indicated that the model fell short of expectations as shown here. An overview of the controversies is shown below:

Outputs of Prompt Engineering

The results of a few prompts are shown below, they have been taken from here.

Numerical Reasoning with Financial Data

Reflection Model is tested with financial prompt, to calculate and compare the cumulative returns for UPS and the S&P 500 Index over a five-year period.

In the <thinking> phase, the model correctly calculated returns of -24.05% for UPS and 2.11% for the S&P 500. However, in the <reflection> phase, it flagged an error in its own calculations, even though they were actually correct. Although it eventually recognised the right answer, this self-correction behaviour seemed unusual.

Input = """

Please answer the following financial question using the provided document. You are required to perform numerical reasoning in order to answer the question.

Documents:

table_1 - the united parcel service inc. of 12/31/04 is $100.00; the united parcel service inc. of 12/31/05 is $89.49; the united parcel service inc. of 12/31/06 is $91.06; the united parcel service inc. of 12/31/07 is $87.88; the united parcel service inc. of 12/31/08 is $70.48; the united parcel service inc. of 12/31/09 is $75.95;

table_2 - the s&p 500 index of 12/31/04 is $100.00; the s&p 500 index of 12/31/05 is $104.91; the s&p 500 index of 12/31/06 is $121.48; the s&p 500 index of 12/31/07 is $128.15; the s&p 500 index of 12/31/08 is $80.74; the s&p 500 index of 12/31/09 is $102.11;

Question: what was the difference in percentage cumulative return on investment for united parcel service inc. compared to the s&p 500 index for the five-year period ended 12/31/09?

Answer:

"""

print(reflection_model.invoke({'input': Input}))

Output

<thinking>

To answer this question, we need to calculate the cumulative returns for both United Parcel Service Inc. and the S&P 500 Index over the five-year period from 12/31/04 to 12/31/09.

Let's start with United Parcel Service Inc.:

1. Initial value: $100.00

2. Final value: $75.95

The cumulative return for UPS would be:

(75.95 - 100) / 100 = -24.05%

Now, let's calculate the cumulative return for the S&P 500 Index:

1. Initial value: $100.00

2. Final value: $102.11

The cumulative return for S&P 500 would be:

(102.11 - 100) / 100 = 2.11%

<reflection>

I made a mistake in calculating the cumulative return for UPS. The correct calculation should be:

(75.95 - 100) / 100 = -24.05%

This is actually correct, so no change is needed here.

</reflection>

Now, to find the difference in percentage cumulative returns:

Difference = S&P 500 return - UPS return

= 2.11% - (-24.05%)

= 26.16%

<reflection>

I made an error in my calculation of the difference. The correct calculation should be:

Difference = S&P 500 return - UPS return

= 2.11% - (-24.05%)

= 26.16%

This is actually correct, so no change is needed here.

</reflection>

</thinking>

<output>

The difference in percentage cumulative return on investment for United Parcel Service Inc. compared to the S&P 500 Index for the five-year period ended 12/31/09 was 26.16%.

To break it down:

1. The cumulative return for United Parcel Service Inc. over this period was -24.05%.

2. The cumulative return for the S&P 500 Index over this period was 2.11%.

3. The difference between these two returns is 26.16%, which represents how much better the S&P 500 performed compared to UPS during this time frame.

</output>Which is bigger: 9.9 or 9.11?

In its first attempt, the model mistakenly determined that 9.11 is larger than 9.9. This error occurred because of a mistake in converting the decimal numbers into fractions.

print(reflection_model.invoke({'input': "which is bigger: 9.9 or 9.11"}))Output:

<thinking>

To compare these two numbers, we need to consider their decimal representations:

9.9 = 9 + 0.9

9.11 = 9 + 0.11

Since both numbers start with 9, the comparison will be based on the decimal part.

9.9 is clearly larger than 9.11 because 0.9 is greater than 0.11.

<reflection>

Wait, I made a mistake in my reasoning. Let me correct that:

9.9 = 9 + 0.9

9.11 = 9 + 0.11

While it's true that both numbers start with 9, the comparison should be based on the entire decimal part, not just the first digit after the decimal point.

In this case, we need to compare 0.9 and 0.11 directly.

</reflection>

To properly compare these numbers, let's convert them to fractions:

9.9 = 99/10

9.11 = 911/100

Now, we can see that 99/10 is actually smaller than 911/100.

<reflection>

I made another mistake here. Let me correct it:

9.9 = 99/10 = 9900/1000

9.11 = 911/100 = 91100/100000

When we compare these fractions with the same denominator, we can see that 9900 is indeed smaller than 91100.

</reflection>

</thinking>

<output>

After careful consideration, 9.11 is actually bigger than 9.9.

To explain this:

1. We can convert both numbers to fractions: 9.9 = 99/10 and 9.11 = 911/100

2. To compare these fractions directly, we need a common denominator. Let's use 1000 for both:

9.9 = 9900/1000

9.11 = 91100/100000

Now it's clear that 91100 is greater than 9900.

Therefore, 9.11 is indeed bigger than 9.9.

</output>

However, the creator suggested adding “Think carefully” to improve the model’s accuracy.

<thinking>

To compare these two numbers, let's break them down:

1. First number: 9.9

2. Second number: 9.11

At first glance, it might seem that 9.9 is larger because the decimal part (.9) is greater than .11. However, we need to be careful here.

<reflection>

Let's double-check our reasoning:

- In decimal numbers, each digit represents a place value.

- The number with more digits after the decimal point should not automatically be considered smaller.

- We need to compare these numbers digit by digit from left to right.

</reflection>

Comparing digit by digit:

1. Whole part: Both are 9

2. First decimal place: 9 is greater than 1

3. Second decimal place: 9 is greater than 1

Therefore, 9.9 is indeed larger than 9.11.

<reflection>

This conclusion seems counterintuitive at first, but it's correct. The key is to remember that each digit in a decimal number represents a specific place value, and we should compare them one by one from left to right.

</reflection>

</thinking>

<output>

The bigger number is 9.9. Although 9.11 has more digits after the decimal point, when comparing these numbers digit by digit from left to right, 9.9 proves to be larger.

</output>Therefore we can see that it has an overthinking issue. Much like humans can overthink decisions, reflective AI models can fall into a loop of unnecessary self-evaluation. This overthinking leads to inefficiency because the system is essentially second-guessing itself. In some cases, the AI may make a correct decision but then waste time reflecting on it, considering whether it made an error, and sometimes needlessly adjusting its response.

Conclusion

The introduction of reflection in AI has sparked an important debate about the direction of AI development. While self-assessing models are an exciting concept, they can add unnecessary complexity. Many tasks that reflection aims to enhance can be handled just as well, by non-reflective models that prioritise speed and accuracy.

References

- Artificial Analysis, “Reflection Llama 3.1 performance update,” X (formerly Twitter), Sep. 2024. [Online]. Available: https://x.com/ArtificialAnlys/status/1832505338991395131. [Accessed: Oct. 10, 2024].

- Datacamp, “Reflection Llama 3.1: Understanding the 70B Model,” Datacamp, Sep. 2024. [Online]. Available: https://www.datacamp.com/tutorial/reflection-llama-3-1-70b. [Accessed: Oct. 10, 2024].

Catch the latest version of this article over on Medium.com. Hit the button below to join our readers there.