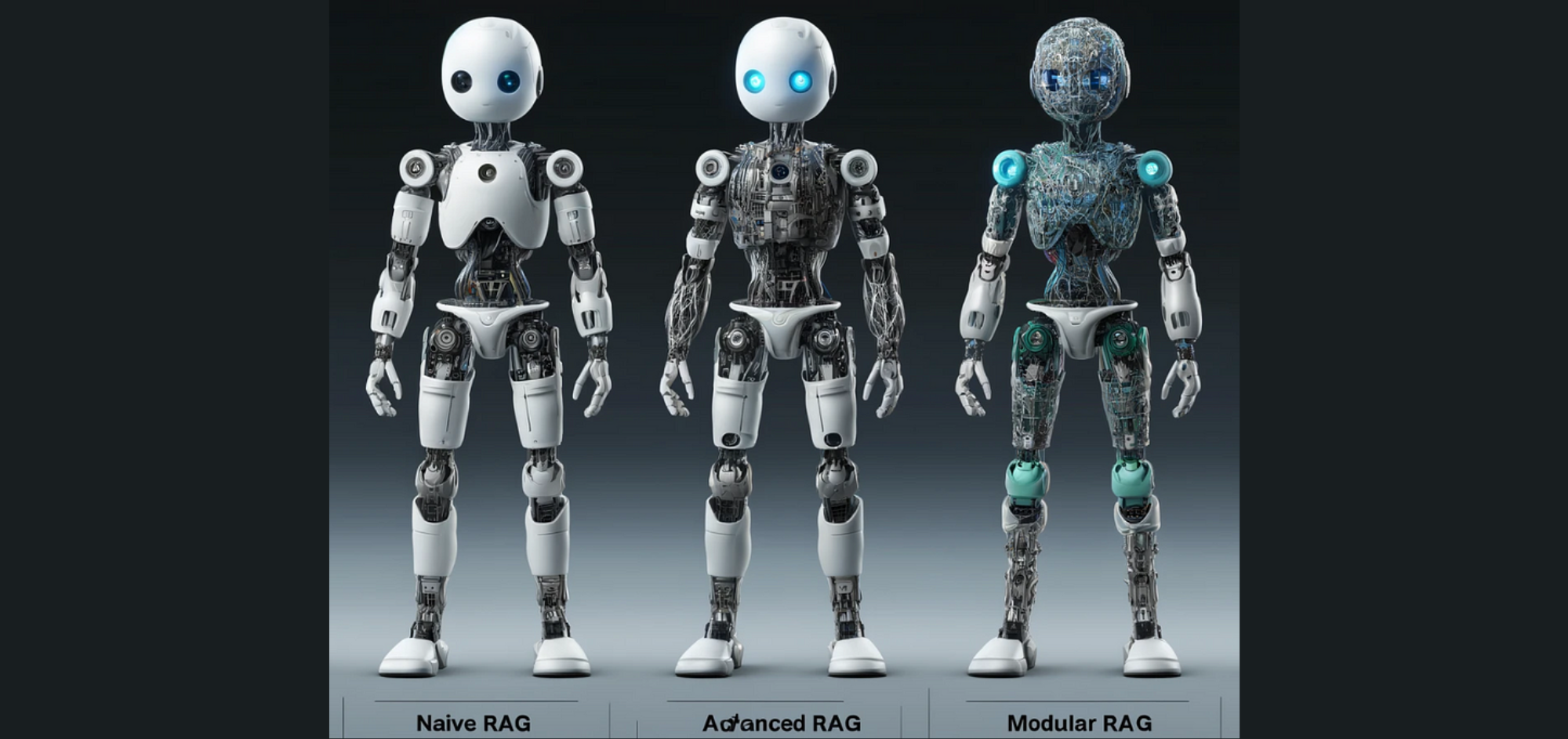

Three Paradigms of RAG

From Naive to Modular: Tracing the Evolution of Retrieval-Augmented Generation

Introduction

Large Language Models (LLMs) have achieved remarkable success, but they still face significant limitations, especially in domain-specific or knowledge-intensive tasks such as question answering. When handling queries that go beyond their training data or require current information, they can produce “hallucinations”: responses that sound plausible but are actually incorrect.

To overcome these challenges, Retrieval-Augmented Generation (RAG) enhances LLMs by retrieving relevant document chunks from external knowledge bases through semantic similarity calculation. By referencing external knowledge, RAG effectively reduces the problem of generating factually incorrect content. This has led to widespread adoption, establishing RAG as a key technology not just for advancing chatbots, but also for helping vision language models generate responses in real-world applications.

When applied to question-answering tasks, a typical RAG instance follows this process:

The diagram shows three main steps. First, documents are divided into smaller chunks, converted into vectors, and stored in a database. Second, the system selects the top K chunks that are most relevant to the meaning of the question. Third, the original question and the selected chunks are fed into a large language model to produce the answer.

RAG technology has developed rapidly in recent years, and the technology tree summarizing related research is shown above in Figure 2. Initially, RAG emerged alongside the Transformer architecture, focusing on enhancing language models by incorporating additional knowledge through Pre-Training Models (PTM). This early stage was characterized by foundational work aimed at refining pre-training techniques. The subsequent arrival of ChatGPT marked a pivotal moment, with LLMs demonstrating powerful in-context learning (ICL) capabilities. As research progressed, the enhancement of RAG was no longer limited to the inference stage and was increasingly combined with LLM fine-tuning techniques.

In this article, we review state-of-the-art RAG methods and describe their evolution through three paradigms: Naive RAG, Advanced RAG, and Modular RAG.

Naive RAG

Naive RAG is the earliest RAG method, and it gained prominence shortly after the advent of ChatGPT. It follows a traditional process that includes indexing, retrieval, and generation.

Let’s understand Naive RAG with an example.

Scenario: Suppose a user asks an LLM, “What are the health benefits of drinking green tea?”

Without Naive RAG: The LLM might generate a response based solely on its pre-trained knowledge, which could be outdated or incomplete. It might say, “Green tea is rich in antioxidants and can help in improving brain function.”

With Naive RAG:

1. Indexing: It begins by cleaning and extracting raw data about green tea from various formats like PDF, HTML, Word, and Markdown. This data is converted into plain text for consistency. To address the context limitations of language models, the text is divided into smaller, manageable chunks. These chunks are then turned into vectors using an embedding model and stored in a vector database. This step is important for enabling quick and accurate searches in the retrieval phase, and it ensures that up-to-date research and articles about green tea are indexed.

2. Retrieval: When the RAG system receives a user question, it uses the same embedding model as in the indexing phase to turn the question into a vector. It then computes the similarity between this vector and the vectors of the indexed text chunks and picks the top K chunks that are most similar to the question. These chunks become the extra context for generating the response. For example, it retrieves the chunks most closely related to “health benefits of green tea.”

3. Generation: The user question and the selected chunks are combined into a single prompt. Depending on the task, the model may be allowed to draw on its built-in knowledge or be restricted to the given documents. In a conversation, previous chat history can be added to the prompt, helping the model handle multi-turn dialogues. For instance, if a retrieved chunk from a recent study states, “Green tea consumption is linked to reduced risk of heart disease,” the model can include this finding in its answer even though it wasn’t part of its initial training data.
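The three steps above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not a production pipeline: the bag-of-words `embed` function stands in for a real embedding model, an in-memory list stands in for a vector database, and all function names (`chunk_text`, `build_index`, `retrieve`, `build_prompt`) are made up for this example. The final prompt would be sent to an LLM, which is left out here.

```python
import math
import re
from collections import Counter

def chunk_text(text, max_words=40):
    """Indexing: split plain text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def embed(text):
    """Toy embedding (assumption): term counts instead of a learned vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def build_index(documents, max_words=40):
    """Indexing: chunk every document and store (chunk, vector) pairs."""
    return [(chunk, embed(chunk)) for doc in documents for chunk in chunk_text(doc, max_words)]

def retrieve(index, question, k=2):
    """Retrieval: embed the question the same way and return the top-k chunks."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

def build_prompt(question, chunks):
    """Generation: combine the question and the retrieved chunks into one prompt."""
    context = "\n".join(f"- {c}" for c in chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

# Tiny illustrative corpus; the first two sentences come from the example above.
documents = [
    "Green tea consumption is linked to reduced risk of heart disease.",
    "Green tea is rich in antioxidants and can help in improving brain function.",
    "Black coffee is usually brewed from roasted coffee beans.",
]
index = build_index(documents)
question = "What are the health benefits of drinking green tea?"
top_chunks = retrieve(index, question, k=2)
prompt = build_prompt(question, top_chunks)
print(prompt)
```

With this toy corpus, the two green tea sentences are retrieved and the coffee sentence is left out, because it shares no terms with the question.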

However, Naive RAG encounters notable drawbacks:

Retrieval Challenges: the retrieval phase can select misaligned or irrelevant chunks and miss crucial information.

Generation Challenges: the model might still hallucinate, and its outputs can suffer from irrelevance, toxicity, or bias, which makes the responses less reliable and of lower quality.

For instance: Asked about recent advances in the treatment of Alzheimer’s disease, a Naive RAG system retrieves documents and provides them to the LLM, but the retrieved information may be only loosely relevant or out of date. The answer can then be vague, such as: “Recent studies suggest that new medications and therapies are being developed to slow the progression of Alzheimer’s.”

Advanced RAG

Advanced RAG introduces specific improvements to overcome the limitations of Naive RAG. Focusing on enhancing retrieval quality, it employs pre-retrieval and post-retrieval strategies.

Scenario: Consider a user asking, “What recent advances have been made in the treatment of Alzheimer’s disease?”

With Advanced RAG: The following procedures are followed:

Pre-retrieval process: The main aim is to improve the indexing structure and the user’s query. Indexing optimization focuses on improving the quality of the indexed content. Strategies include enhancing data granularity, optimizing index structures, adding metadata, alignment optimization, and mixed (hybrid) retrieval, which typically combines keyword-based search with embedding-based search. Query optimization aims to make the user’s original query clearer and more suitable for retrieval. Common methods include query rewriting, query transformation, and query expansion.

For instance: Using the Alzheimer’s example, Advanced RAG uses a refined indexing strategy in which documents are indexed not only by content but also, through added metadata, by recency and relevance to medical advancements. With query transformation, the system retrieves the most current and relevant research papers discussing the latest treatment breakthroughs, rather than broader articles on Alzheimer’s.
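As a rough sketch of these two pre-retrieval ideas, the snippet below expands a query into several variants and filters indexed chunks by metadata before any similarity search happens. Everything in it is an assumption made for illustration: the `Chunk` fields, the `EXPANSIONS` table (a stand-in for an LLM-based query rewriter), and the sample chunks, which are invented placeholders rather than real documents.

```python
from dataclasses import dataclass

@dataclass
class Chunk:
    text: str
    year: int    # metadata added at indexing time (assumed field)
    topic: str   # metadata added at indexing time (assumed field)

# Hypothetical expansion table standing in for an LLM-based query rewriter.
EXPANSIONS = {
    "advances": ["breakthroughs", "clinical trials"],
    "treatment": ["therapy", "drug"],
}

def expand_query(query):
    """Query expansion: return the original query plus lowercase variants
    in which one known term is swapped for an alternative."""
    variants = [query]
    lowered = query.lower()
    for term, alternatives in EXPANSIONS.items():
        if term in lowered:
            variants.extend(lowered.replace(term, alt) for alt in alternatives)
    return variants

def filter_by_metadata(chunks, topic, min_year):
    """Indexing optimization: use metadata to keep only recent, on-topic chunks."""
    return [c for c in chunks if c.topic == topic and c.year >= min_year]

# Invented placeholder chunks, for illustration only.
chunks = [
    Chunk("Overview of Alzheimer's disease symptoms.", 2010, "alzheimers"),
    Chunk("Results of a clinical trial for an Alzheimer's therapy.", 2023, "alzheimers"),
    Chunk("Green tea and heart health.", 2022, "nutrition"),
]

query = "What recent advances have been made in the treatment of Alzheimer's disease?"
variants = expand_query(query)
candidates = filter_by_metadata(chunks, topic="alzheimers", min_year=2020)

for v in variants:
    print(v)
print([c.text for c in candidates])
```

Each variant would then be embedded and searched only against `candidates`, so older or off-topic chunks never compete for the top K slots.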

Post-Retrieval Process: After retrieving relevant information, it is essential to combine it effectively with the original query. The main steps are re-ranking, which highlights the most important content, and context compression, which avoids information overload.

Re-ranking: Retrieved documents are re-ranked to prioritize those that specifically mention recent clinical trials or FDA approvals, ensuring that the most pertinent information is considered first.

Context compression: Redundant or less relevant information is filtered out to focus on key findings from the most authoritative sources.

So, the result could be: “The latest breakthrough in Alzheimer’s treatment includes the development of a new drug, Aducanumab, which has shown potential in reducing cognitive decline in early-stage patients, according to a 2021 FDA report.”
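The post-retrieval steps described above, re-ranking and context compression, can be sketched as follows. This is a simplified illustration under assumptions: real systems often use a trained re-ranking model and an LLM-based or learned compressor, whereas here re-ranking just counts assumed priority phrases and compression keeps unique sentences that mention a query term. The function names and the sample chunks are invented for the example.

```python
import re

# Assumed relevance signals, taken from the Alzheimer's example in the text.
PRIORITY_TERMS = ("clinical trial", "fda approval")

def rerank(chunks, priority_terms=PRIORITY_TERMS):
    """Re-ranking: chunks that mention more priority terms come first.
    Python's sort is stable, so ties keep their original retrieval order."""
    def score(chunk):
        text = chunk.lower()
        return sum(term in text for term in priority_terms)
    return sorted(chunks, key=score, reverse=True)

def compress(chunks, query_terms, max_sentences=3):
    """Context compression: keep unique sentences that mention a query term."""
    seen, kept = set(), []
    for chunk in chunks:
        for sentence in re.split(r"(?<=[.!?])\s+", chunk.strip()):
            key = sentence.lower()
            if key in seen or not any(term in key for term in query_terms):
                continue  # drop duplicates and sentences unrelated to the query
            seen.add(key)
            kept.append(sentence)
            if len(kept) == max_sentences:
                return kept
    return kept

# Invented placeholder chunks, for illustration only.
retrieved = [
    "Alzheimer's disease affects memory. It is a common cause of dementia.",
    "A clinical trial reported results for a new therapy. The therapy later received FDA approval.",
    "A clinical trial reported results for a new therapy. Caregivers need support.",
]

ranked = rerank(retrieved)
context = compress(ranked, query_terms=("therapy", "trial", "fda"))
print(context)
```

In this toy run, the chunk that mentions both a clinical trial and an FDA approval moves to the front, and the compressed context keeps only two sentences, dropping the repeated sentence and the ones unrelated to the query.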

Modular RAG

The modular RAG architecture advances beyond the former two RAG paradigms, offering enhanced adaptability and versatility. It incorporates diverse strategies for improving its components, such as adding a search module for similarity searches and refining the retriever through fine-tuning. Modular RAG builds upon the foundational principles of Advanced and Naive RAG, illustrating a progression and refinement within the RAG family.

Modules: The Modular RAG framework introduces additional specialized components to enhance retrieval and processing capabilities.

Consider the scenario: “What are the latest research findings on the health benefits of green tea?”

- Search Module: When the question is posed, this module activates and uses LLM-generated queries to search through various databases and knowledge graphs specifically for the latest research papers or articles on green tea.

- RAG-Fusion: This module takes the initial user query and expands it into multiple queries. For instance, it might generate additional queries like “recent clinical trials on green tea” and “green tea effects on mental health.” These expanded queries are then used to search for a wide range of relevant information.

- Memory Module: As articles and papers are retrieved, this module helps by recalling previously retrieved documents on similar topics to improve the relevance and depth of the current search, using the system’s built-in memory of past queries and their results.

- Routing: This module analyzes the incoming data and decides the best path for processing it. For example, it might route the query to specific scientific databases for academic papers rather than general websites.

- Predict Module: To ensure the retrieved information isn’t redundant and is relevant, this module generates a synthesized context from the retrieved data, focusing only on the most pertinent information like key findings or new insights about green tea.

- Task Adapter Module: Tailors RAG to specific tasks by automating prompt retrieval for zero-shot inputs and creating task-specific retrievers. For example, this module recognizes that the query is about health benefits, a specific knowledge domain. It adjusts the retrieval process to focus on the most credible medical and health-oriented sources and might generate specialized prompts to refine further retrieval.
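Two of the modules above, RAG-Fusion and Routing, are easy to sketch. The text only says that RAG-Fusion expands the query into multiple queries. Merging the per-query result lists with reciprocal rank fusion (RRF), as done below, is a common choice and is an assumption here, as are the constant `k=60`, the keyword-based `route` function, the source names, and the document IDs.

```python
from collections import defaultdict

def reciprocal_rank_fusion(ranked_lists, k=60):
    """Merge several ranked lists of document IDs into one ranking.
    Each document scores sum(1 / (k + rank)) over the lists it appears in."""
    scores = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken alphabetically for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))

def route(query):
    """Hypothetical router: send research-style questions to a scientific
    database and everything else to general web search."""
    academic_cues = ("research", "clinical", "study", "trial")
    lowered = query.lower()
    if any(cue in lowered for cue in academic_cues):
        return "scientific_database"
    return "general_web"

# Pretend each expanded query returned its own ranked list (invented IDs).
results_per_query = [
    ["paper_a", "paper_b", "paper_c"],  # original user query
    ["paper_b", "paper_d"],             # "recent clinical trials on green tea"
    ["paper_b", "paper_a"],             # "green tea effects on mental health"
]

fused = reciprocal_rank_fusion(results_per_query)
source = route("What are the latest research findings on the health benefits of green tea?")
print(source, fused)
```

Documents that rank well for several of the expanded queries, such as `paper_b` here, rise to the top of the fused list, which is the intended effect of searching with multiple queries.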

Patterns

Modular RAG uses flexible and interchangeable modules to enhance the performance of LLMs. Its main patterns are as follows:

- Module Interaction and Adjustment: These patterns show how different modules of the system work together more dynamically. DSP (Demonstrate-Search-Predict) and ITER-RETGEN (iterative retrieval-generation, which follows a Retrieve-Read-Retrieve-Read flow) use the outputs of one module to improve or inform the function of another, enhancing the overall synergy and effectiveness.

- Flexible Orchestration: These methods demonstrate the adaptability of Modular RAG, where the system adjusts its retrieval process to the scenario at hand rather than following a fixed retrieval procedure. Example techniques: FLARE and Self-RAG.

- Integration with Other Technologies: Modular RAG can also work easily with other advanced technologies. For example, it can improve retrieval accuracy by fine-tuning the retriever, personalize outputs by fine-tuning the generator, or use reinforcement learning to enhance both processes collaboratively.
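To make the module-interaction idea more concrete, here is a small sketch of an iterative retrieve-then-generate loop in which each round's draft answer is appended to the next retrieval query. It is only loosely inspired by the Retrieve-Read-Retrieve-Read flow mentioned above and is not the published ITER-RETGEN algorithm. The `iterative_rag` function, the keyword-lookup retriever, and the generator that simply joins the context are all hypothetical stand-ins for real modules.

```python
def iterative_rag(question, retrieve, generate, rounds=2):
    """Alternate retrieval and generation; each draft informs the next query."""
    draft = ""
    history = []
    for _ in range(rounds):
        query = f"{question} {draft}".strip()  # output of one module feeds another
        context = retrieve(query)
        draft = generate(question, context)
        history.append((query, context, draft))
    return draft, history

# Toy knowledge base keyed by a trigger word (illustrative only).
CORPUS = {
    "tea": "Green tea contains catechins.",
    "catechins": "Catechins are antioxidants.",
}

def toy_retrieve(query):
    """Stand-in retriever: return entries whose key appears in the query."""
    lowered = query.lower()
    return [text for key, text in CORPUS.items() if key in lowered]

def toy_generate(question, context):
    """Stand-in generator: a real system would call an LLM here."""
    return " ".join(context)

answer, history = iterative_rag("What is in green tea?", toy_retrieve, toy_generate)
print(answer)
```

In the first round only the question is used for retrieval. In the second round the draft mentions catechins, so the retriever can also find the entry about catechins, which the question alone would not have matched.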

Comparison of RAG with other model optimization methods

The diagram, presented as a quadrant chart, illustrates how these methods differ along two dimensions: “External Knowledge Required” and “Model Adaptation Required.”

- Prompt Engineering: Requires minimal model modifications and external knowledge, leveraging the inherent capabilities of LLMs.

- Fine-tuning (FT): Involves further training of the model to internalize knowledge, making it suitable for tasks that require specific structures, styles, or formats.

- RAG: Typically requires less training of the model itself than methods like fine-tuning. Instead, RAG relies heavily on external, regularly updated knowledge sources to enhance the model’s output. This allows RAG to provide more accurate and current information by integrating the latest data from outside databases and texts, without the need for extensive retraining of the model.

Future Works

The key future developments highlighted for RAG are as follows:

Optimization of Retrieval Efficiency: Future research can focus on speeding up retrieval over large knowledge bases. Indexing and retrieval can be optimized to reduce latency and improve responsiveness.

Multi-Modal RAG: RAG can be extended beyond text to other modalities such as images, audio, and video. Many innovations in Multi-Modal RAG have already appeared, but the area remains open for further research.

Operational and Production Efficiency: Future work can improve the practical deployment of RAG systems in production environments, with better management of computational resources and assured data security in commercial applications.

Conclusion

This article discusses RAG, a method that enhances LLMs by integrating external knowledge to improve response accuracy, especially for complex, real-world applications. RAG reduces factual inaccuracies by drawing on up-to-date knowledge sources. It has evolved through three paradigms, Naive, Advanced, and Modular RAG, each offering specific improvements and more flexibility for task-specific applications.

References

- Gao, Y., Xiong, Y., Gao, X., Jia, K., Pan, J., Bi, Y., Dai, Y., Sun, J., Wang, M., & Wang, H. (2023, December 18). Retrieval-Augmented Generation for Large Language Models: A Survey. arXiv.org. https://arxiv.org/abs/2312.10997

- Peng, W., Li, G., Jiang, Y., Wang, Z., Ou, D., Zeng, X., Xu, D., Xu, T., & Chen, E. (2023, November 7). Large Language Model based Long-tail Query Rewriting in Taobao Search. arXiv.org. https://arxiv.org/abs/2311.03758

- Ma, X., Gong, Y., He, P., Zhao, H., & Duan, N. (2023, May 23). Query Rewriting for Retrieval-Augmented Large Language Models. arXiv.org. https://arxiv.org/abs/2305.14283
