What is LM Studio?

Working Examples

Introduction

In the rapidly evolving landscape of artificial intelligence, Large Language Models (LLMs) have emerged as powerful tools capable of understanding and generating human-like text. While cloud-based services offer convenient access to these models, there’s a growing demand for local, hands-on experimentation. Enter LM Studio, a game-changing desktop application that brings the power of LLMs directly to your computer, offering a unique blend of accessibility and control for AI enthusiasts, researchers, and developers alike. LM Studio stands out as a comprehensive solution for those looking to explore the capabilities of LLMs without the constraints of cloud-based services. With its intuitive interface, support for a wide range of open-source models, and powerful features like local API endpoints, LM Studio is democratising access to cutting-edge AI technology.

In this article, we’ll dive deep into what LM Studio offers, providing working examples that showcase its versatility and ease of use. Whether you’re a curious beginner or a seasoned AI practitioner, LM Studio opens up new possibilities for innovation and learning in the exciting world of language models.

What is LM Studio?

LM Studio is a powerful desktop application designed to bring the capabilities of Large Language Models (LLMs) directly to your local machine. At its core, LM Studio serves as a comprehensive platform for downloading, running, and experimenting with a wide variety of open-source LLMs, such as Llama 3.1, Phi-3, and Gemma 2. This user-friendly tool bridges the gap between cloud-based AI services and local computing, allowing users to harness the power of advanced language models without relying on external APIs or internet connectivity.

The key features of LM Studio make it a versatile tool for AI exploration. It offers a familiar chat interface, reminiscent of popular AI chatbots, where users can interact with their chosen models in real time. The built-in model management system simplifies the process of discovering, downloading, and organising different LLMs. Perhaps most importantly, LM Studio includes a local server functionality that can listen on OpenAI-compatible endpoints, enabling seamless integration with existing tools and workflows that typically rely on cloud-based AI services. Designed with cross-platform compatibility in mind, LM Studio is available for macOS, Windows, and Linux operating systems. This broad support ensures that a wide range of users, from individual enthusiasts to professional developers, can leverage the power of local LLMs regardless of their preferred computing environment.

By offering a balance of user-friendly features for beginners and advanced capabilities for experienced users, LM Studio serves as a valuable tool for anyone looking to dive deeper into the world of large language models, whether for research, development, or personal exploration.

Getting Started with LM Studio

Getting started with LM Studio is a straightforward process that allows you to quickly begin experimenting with local LLMs. Before diving into the exciting world of AI models, you’ll need to set up the application on your machine. Let’s walk through the essential steps to get LM Studio up and running:

1. Check System Requirements

- Ensure your computer meets the minimum requirements

— For macOS: Apple Silicon (M1/M2/M3) chip, macOS 13.4 or newer, 16GB+ RAM recommended

— For Windows: x64 or ARM-based system, AVX2 instruction set support (for x64), 16GB+ RAM recommended

— For Linux: Ubuntu 20.04 or newer, x64 architecture

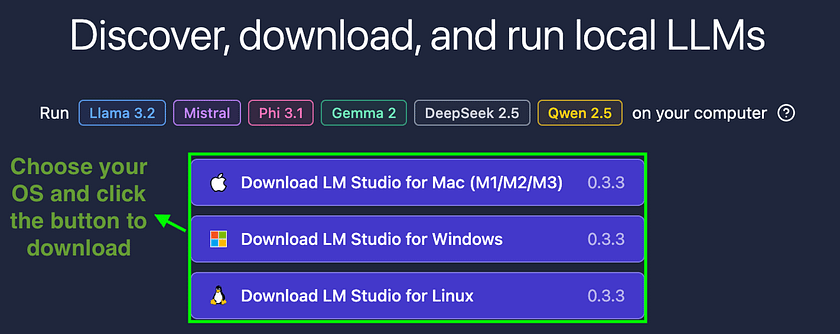

2. Download and Install LM Studio

- Visit the official LM Studio website

- Navigate to the Downloads page

- Select and download the appropriate version for your operating system

- Run the installer and follow the on-screen instructions to complete the installation

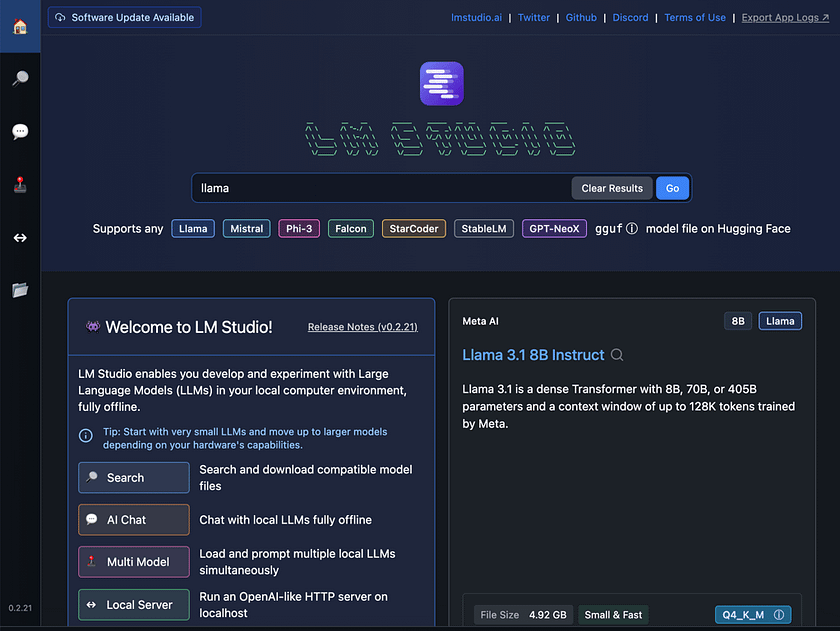

3. Launch LM Studio

- Open the installed LM Studio application

- You’ll be greeted with the main interface, featuring tabs for Chat, Discover, and My Models

Working Example 1: Chat with a Local LLM

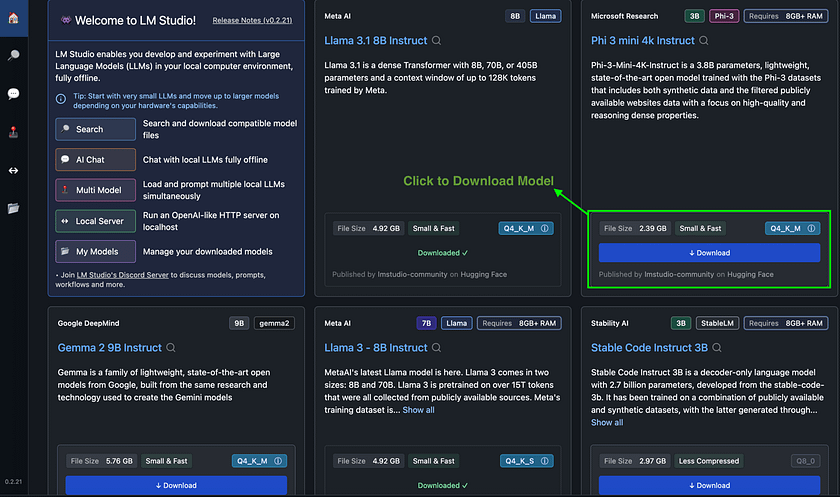

Now that you have LM Studio installed, let’s walk through the process of chatting with a local large language model. This example will demonstrate how to download a model, load it into memory, and engage in a conversation. We’ll use Phi 3 mini 4k Instruct as our example model, but the process is similar for other models available in LM Studio.

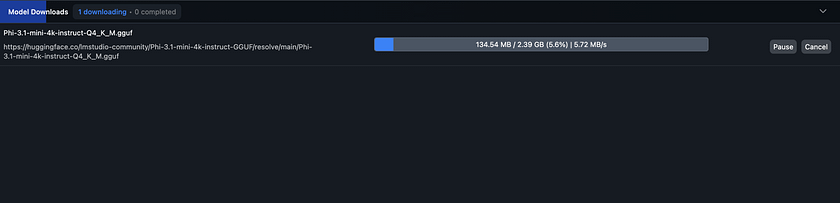

1. Download the Model

- Navigate to the Discover tab in LM Studio

- Search for "Phi 3 mini 4k Instruct" in the search bar

- Click the download button and wait for the process to complete

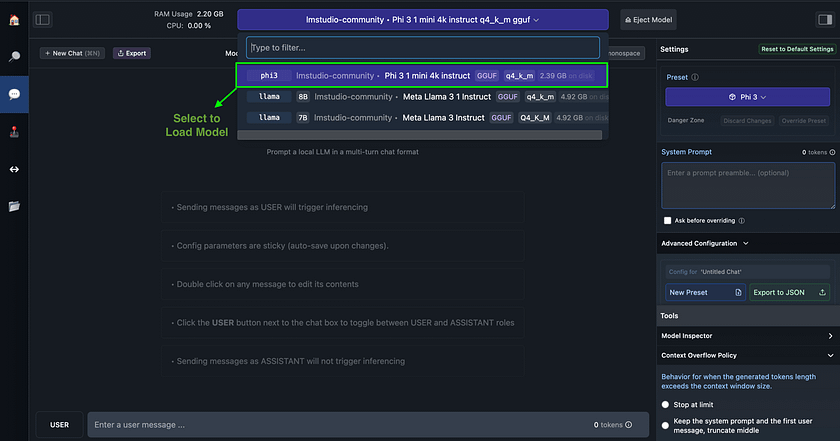

2. Load the Model

- Switch to the Chat tab

- Click on the model loader button (usually at the top of the interface)

- Select the downloaded Hermes 2 Pro Mistral 7B model from the list

- Wait for the model to load into memory (this may take a few moments)

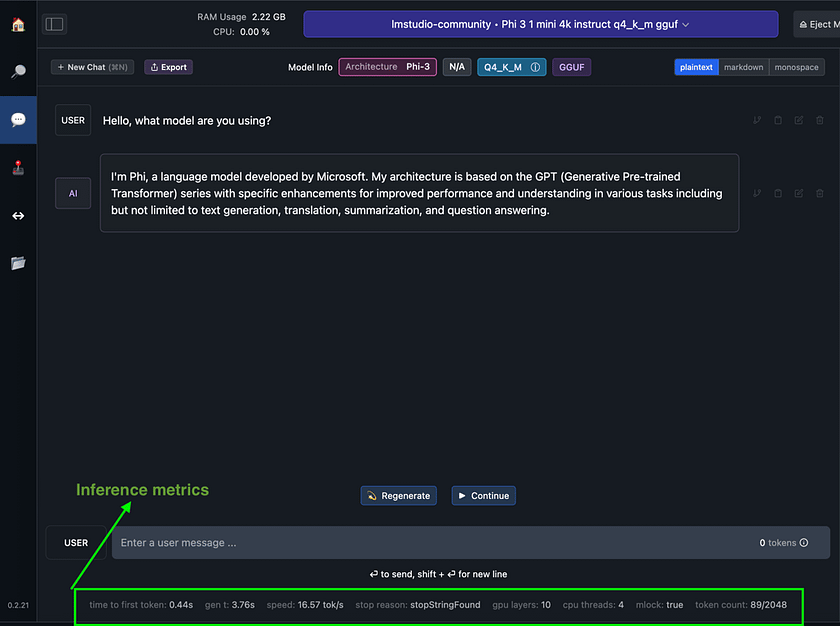

3. Start Your Chat

- Once loaded, you’ll see the chat interface

- Type your first message, e.g., “Hello, What model are you using?”

- Press enter and wait for the model’s response

- Continue the conversation by asking follow-up questions

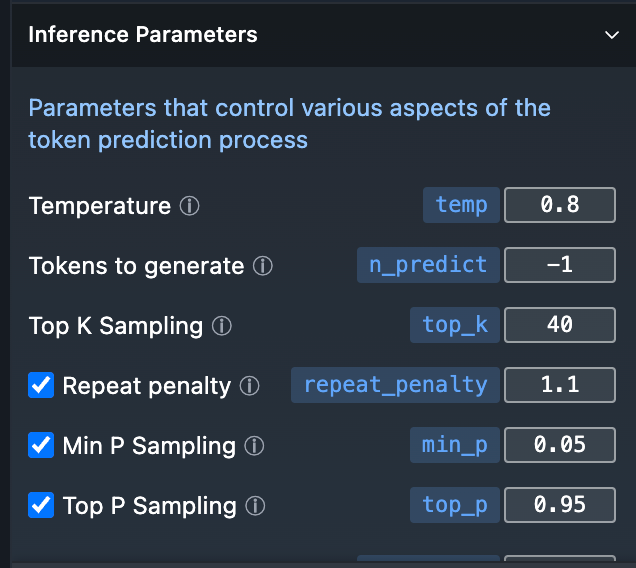

One of LM Studio’s standout features is its transparent display of inference metrics after each model response. As shown in the image, these metrics include time to first token, generation time, generation speed (tokens per second), stop reason, GPU layers used, CPU threads, whether memory-efficient inference (mlock) is enabled, and token count.

This real-time feedback is invaluable for users looking to optimise model performance or understand the underlying processes. For instance, you can see how different settings affect generation speed or how close you are to the context limit. This level of insight is rarely available in cloud-based AI services, making LM Studio an excellent tool for both learning about and fine-tuning LLM performance.

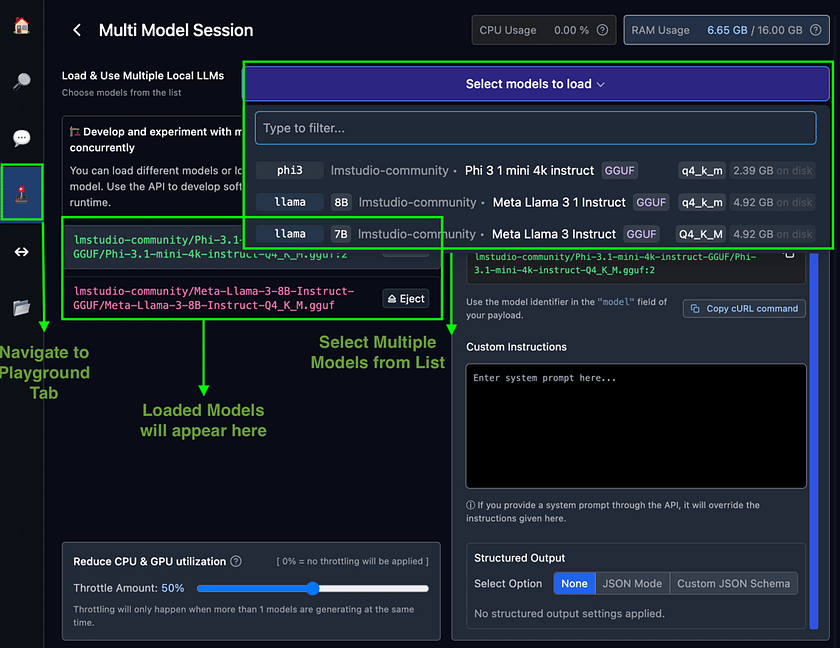

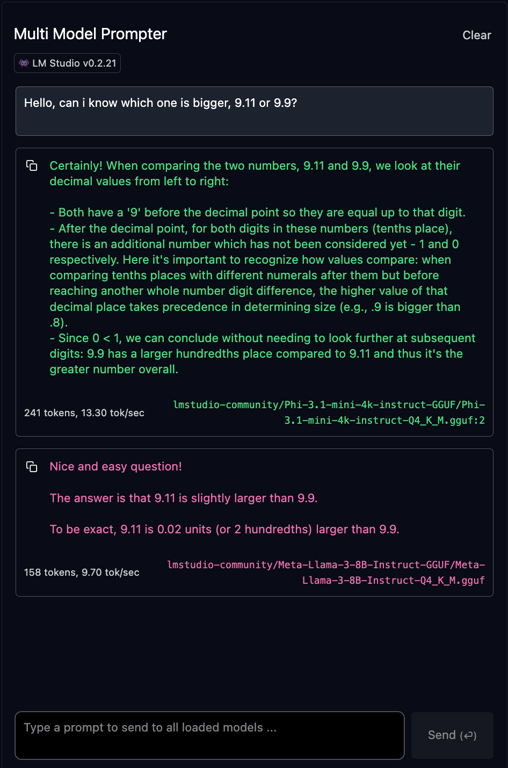

Working Example 2: Harnessing Multi Model Sessions

LM Studio’s Multi Model Sessions feature enables users to load and interact with multiple language models simultaneously, each with custom settings. This powerful capability allows for direct comparison of model outputs, efficient experimentation, and the creation of ensemble systems. Users can access these models via API or the Multi-Model Prompt UI, providing a unique environment for in-depth LLM analysis and leveraging the strengths of various models in a single session.

1. Accessing Multi Model Sessions

- Navigate to the Playground tab in LM Studio

- Look for the “Multi Model Sessions” section

2. Setting Up Models

- Click on “Select Models to Load” to select your first model

- Choose the model from your downloaded list

- Configure custom settings for the model (e.g., context length, GPU usage)

- Repeat this process to add additional models

3. Using the Multi-Model Prompt UI

- After models are loaded, access the Multi-Model Prompt UI

- Enter a prompt that you want to test across all loaded models

- Submit the prompt and observe the responses from each model

Multi Model Sessions in LM Studio offer a powerful suite of features designed for advanced LLM experimentation and comparison. This functionality allows for custom configurations per model, enabling performance optimisation tailored to each LLM’s characteristics.

The integrated API access facilitates programmatic interaction with multiple loaded models, while built-in resource management, including guardrails, ensures system stability. The Multi-Model Prompt UI enables real-time output comparison across different LLMs, and the session’s flexibility accommodates various model types, from instruction-tuned to base models, all within a single, efficient workspace.

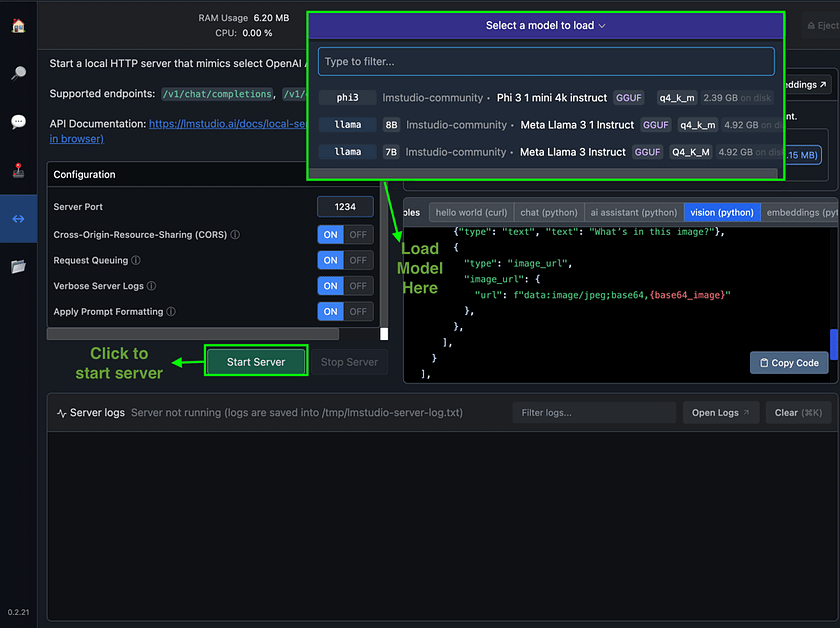

Working Example 3: Using LM Studio as a Local API Server

LM Studio’s local API server functionality transforms your personal computer into a powerful AI backend, offering OpenAI-compatible endpoints for seamless integration with existing tools and workflows. This feature enables developers to leverage local LLMs in their applications, providing greater control over data privacy, reduced latency, and cost-effective AI solutions without relying on cloud services.

1. Start the LM Studio Server

- Navigate to the Developer tab in LM Studio

- Locate the “Local Server” section

- Load a downloaded model

- Click “Start Server” and note the port number (typically 1234)

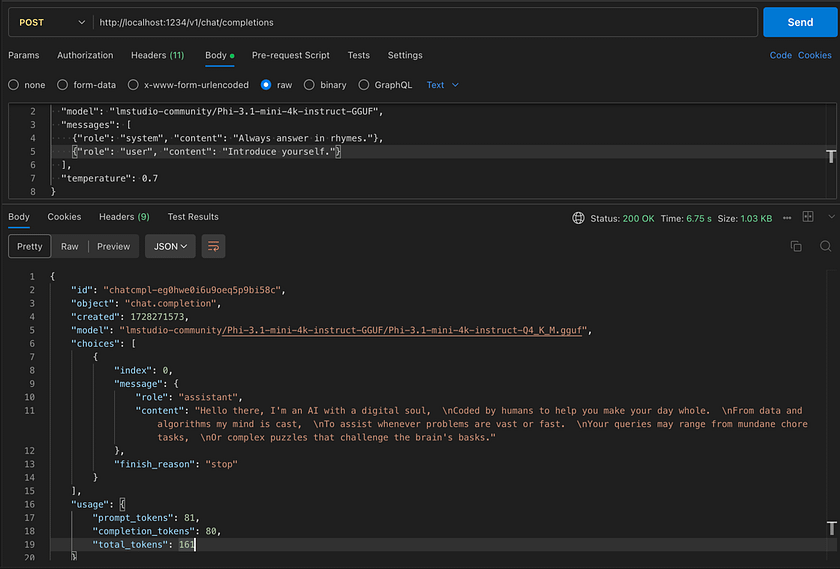

2. Prepare Your API Client

- Open Postman or your preferred API testing tool

- Set up a new request with the base URL: http://localhost:1234

3. Make an API Request

- Choose an endpoint (e.g., /v1/chat/completions)

- Set the request method to POST

- Add headers: Content-Type: application/json and Authorization: Bearer lm-studio

- In the request body, specify the model and your prompt:

{ "model": "lmstudio-community/Phi-3.1-mini-4k-instruct-GGUF", "messages": [ {"role": "system", "content": "Always answer in rhymes."}, {"role": "user", "content": "Introduce yourself."} ], "temperature": 0.7 }

4. Explore Other Endpoints

- Test /v1/models (GET) to list available models

- Use /v1/completions for text completion tasks

- Try /v1/embeddings to generate text embeddings

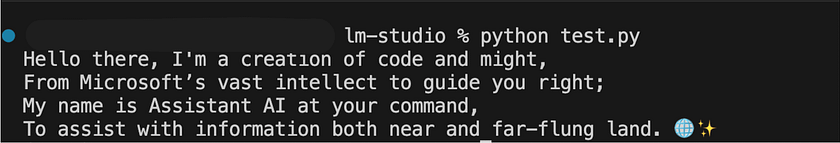

5. Integrate with Your Application

- Update your existing code that uses OpenAI’s API to point to your local server

- Adjust the base URL to http://localhost:1234

- Use your loaded model’s name instead of OpenAI model names

from openai import OpenAI

# Point to the local server

client = OpenAI(base_url="http://localhost:1234/v1", api_key="lm-studio")

completion = client.chat.completions.create(

model="lmstudio-community/Phi-3.1-mini-4k-instruct-GGUF",

messages=[

{"role": "system", "content": "Always answer in rhymes."},

{"role": "user", "content": "Introduce yourself."}

],

temperature=0.7,

)

print(completion.choices[0].message)

LM Studio’s local API server provides a robust platform for AI development and experimentation, offering OpenAI-compatible endpoints that support chat completions, text completions, and embeddings generation. This functionality allows developers to seamlessly transition from cloud-based services to local LLMs, enabling enhanced privacy, reduced costs, and greater flexibility in model selection and customisation. By exposing these capabilities through familiar API protocols, LM Studio bridges the gap between cutting-edge AI technology and practical application development, empowering users to build sophisticated AI-driven solutions entirely on their local machines.

Advanced Features and Customisation

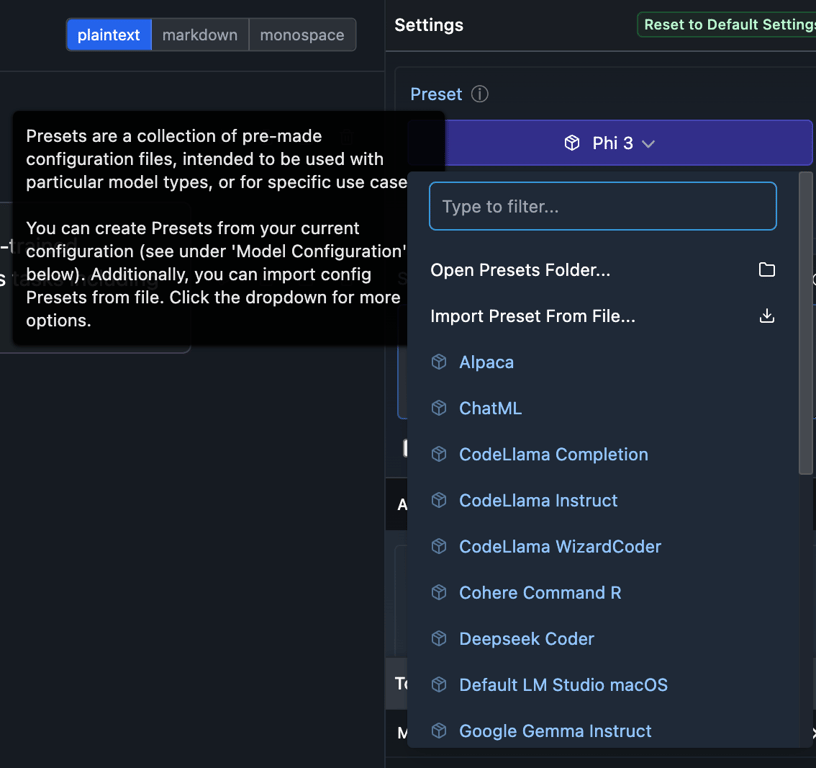

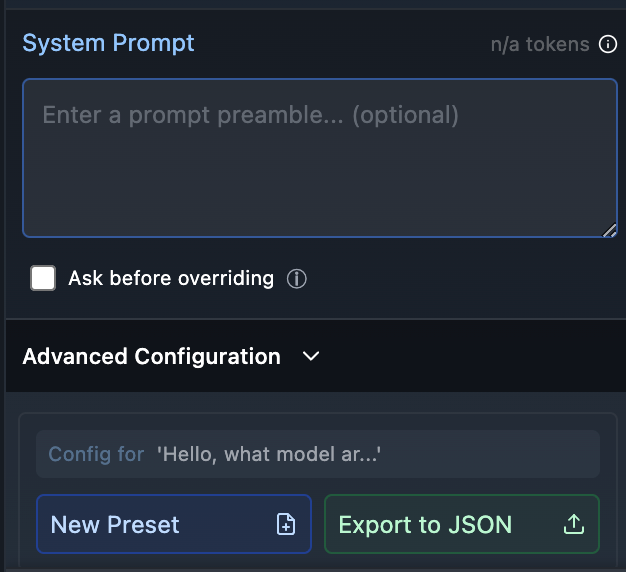

LM Studio offers a range of advanced features for power users and developers. Config presets allow you to save and quickly apply custom configurations for different use cases, streamlining your workflow when switching between tasks or models. These presets can include system prompts, inference parameters, and other settings, enabling you to fine-tune your LLM interactions with precision. Customising prompt templates provides even greater control over model behaviour, allowing you to tailor the input format to specific models or tasks, potentially improving the quality and relevance of generated responses.

For those seeking automation and scripting capabilities, LM Studio provides a powerful Command Line Interface (CLI) tool called ‘lms’. This tool enables users to perform a variety of operations from the terminal, including starting and stopping the LM Studio server, loading and unloading models, and even running inferences. The ‘lms’ CLI is particularly useful for integrating LM Studio into larger workflows or automated pipelines, making it an invaluable asset for developers looking to incorporate local LLM capabilities into their projects or research endeavours.

Conclusion

LM Studio empowers users to harness the potential of local LLMs through its user-friendly interface, multi-model support, and OpenAI-compatible API. As AI continues to evolve, local LLM experimentation becomes increasingly vital for innovation, privacy, and personalised solutions. LM Studio provides an accessible yet powerful platform for this emerging frontier. Whether you’re an AI enthusiast, developer, or researcher, LM Studio offers the tools to explore, experiment, and innovate with LLMs. Take the plunge into local AI development — download LM Studio and unlock the potential of LLMs on your own machine today.

References

- LM Studio. Documentation. View Docs.

Catch the latest version of this article over on Medium.com. Hit the button below to join our readers there.